Introduction

In the previous articles, we created:

- A temperature and humidity sensor which transmits its values to an InfluxDB database.

- An Ansible script which installs InfluxDB and Grafana Docker containers on a host to store and visualize sensor data.

The resulting infrastructure serves its purpose but has a few drawbacks:

- The host is a single point of failure and there are no self-healing capabilities.

- We use unencrypted HTTP.

- It is not possible to scale out in case of higher workloads.

To resolve these issues, this article explains how to run InfluxDB and Grafana in a Kubernetes cluster on AWS. We will cover the following features:

- Spawn a Kubernetes (k8s) cluster in AWS using the Elastic Kubernetes Service (EKS). Use CloudFormation to describe the cluster in YAML, following an “Infrastructure as Code” approach.

- Run Grafana and InfluxDB as StatefulSets inside the cluster.

- Use Amazon Elastic Block Store (EBS) to store the application data of both services.

- Install an Application Load Balancer (ALB) which listens for HTTPS requests and uses a proper certificate.

Architecture

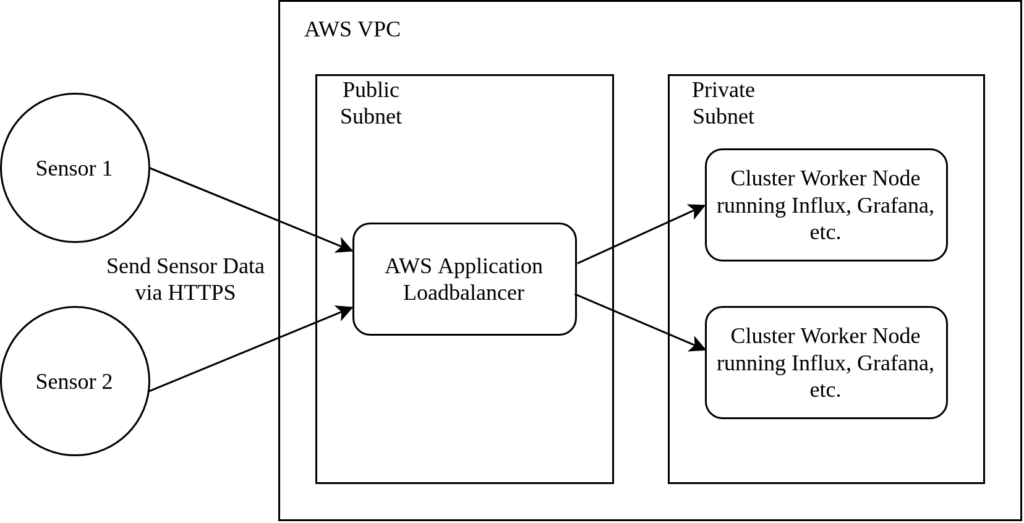

Our solution will have the following structure:

The temperature/humidity sensors transmit their data via HTTPS to a load balancer. The load balancer is part of a public subnet in an AWS Virtual Private Cloud (VPC). The Kubernetes cluster is hidden in a private subnet and can only be reached through the load balancer.

To implement these requirements, we need the following components in AWS:

- A Kubernetes cluster based on the Elastic Kubernetes Service (EKS)

- Elastic Block Store (EBS) volumes for persistence

- An Application Load Balancer to provide HTTPS and to forward requests to the correct container instance based on their URL

Source Code

The project's source code is available here on GitHub. It contains scripts and documentation to spin up a cluster in AWS. Furthermore, the repository provides Kubernetes YAML files to deploy the applications, the load balancer and the persistent storage in the cluster.

CloudFormation

Before we can implement our Kubernetes cluster, we have to discuss how it is deployed in AWS. An EKS cluster can be created manually with the AWS web management console. As an alternative, CloudFormation is available. It allows us to describe AWS infrastructure in a YAML file. The advantages are:

- The template also serves as documentation and can be put under version control.

- You can easily spawn (and delete) several copies of your infrastructure without manual labour.

Below is an example to demonstrate the approach:

    ---
    AWSTemplateFormatVersion: '2010-09-09'
    Description: 'Create a VPC'

    Parameters:
      VpcBlock:
        Type: String
        Default: 192.168.0.0/16
        Description: The CIDR range for the VPC. This should be a valid private (RFC 1918) CIDR range.

    Resources:
      VPC:
        Type: AWS::EC2::VPC
        Properties:
          CidrBlock: !Ref VpcBlock
          EnableDnsSupport: true
          EnableDnsHostnames: true
          Tags:
            - Key: Name
              Value: !Sub '${AWS::StackName}-VPC'

The snippet creates an empty VPC. The CIDR block, which describes the VPC's IP range, can be passed as a parameter to make the template more flexible. The VPC name is generated dynamically from the stack name. The following shell command creates a stack from the template:

    aws cloudformation create-stack \
      --region eu-central-1 \
      --stack-name example-stack \
      --template-body file://"my-template.yaml"

The command specifies a region, the stack's name (referenced in the template to generate the VPC name) and the CloudFormation input file. Depending on the size of the stack, it can take several minutes until it is created. In the next chapter, we will use CloudFormation to specify an AWS Kubernetes cluster.

Cluster Components

This section presents the components we have to describe in our CloudFormation template. The full template and its details can be found here.

VPC

Many AWS resources, including Kubernetes clusters, are placed in a “Virtual Private Cloud” (VPC). A VPC can be seen as a virtual network which consists of subnets. A subnet is either public or private and is hosted in a single AWS Availability Zone. Public subnets are internet-facing: their route table sends outbound traffic to an internet gateway. Private subnets are not accessible from the internet but can communicate with internet resources via a NAT gateway. Each subnet can be associated with its own route table. In our case, we define public and private route tables to make sure the traffic is forwarded correctly to the internet gateway and the NAT gateway, respectively. To run our EKS cluster, we need the following subnets:

- Two public subnets for the Application Load Balancer. One would be sufficient for our purposes, but an ALB has to span at least two Availability Zones.

- A single private subnet for the EKS cluster including its EC2 worker nodes.

With this topology, we make sure that the cluster is protected against malicious connection attempts and can only be reached through the Application Load Balancer.
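The routing described above could be sketched in CloudFormation roughly as follows. This is a minimal illustration, not the repository's actual template: it assumes the `VPC` resource from the earlier example, the CIDR ranges are made up, and every resource name other than `VPC`, `PublicSubnet01` and `PrivateSubnet01` is hypothetical. The second public subnet and the route table association of the private subnet are omitted for brevity; they would follow the same pattern.

```yaml
# Sketch only: CIDR ranges and most resource names are assumptions.
Resources:
  InternetGateway:
    Type: AWS::EC2::InternetGateway

  # Attaches the internet gateway to the VPC from the earlier example.
  VPCGatewayAttachment:
    Type: AWS::EC2::VPCGatewayAttachment
    Properties:
      VpcId: !Ref VPC
      InternetGatewayId: !Ref InternetGateway

  PublicSubnet01:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      CidrBlock: 192.168.0.0/18          # assumed range inside the VPC block
      AvailabilityZone: !Select [0, !GetAZs '']
      MapPublicIpOnLaunch: true

  PrivateSubnet01:
    Type: AWS::EC2::Subnet
    Properties:
      VpcId: !Ref VPC
      CidrBlock: 192.168.128.0/18        # assumed range inside the VPC block
      AvailabilityZone: !Select [0, !GetAZs '']

  # Public route table: default route points to the internet gateway.
  PublicRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC

  PublicRoute:
    Type: AWS::EC2::Route
    DependsOn: VPCGatewayAttachment
    Properties:
      RouteTableId: !Ref PublicRouteTable
      DestinationCidrBlock: 0.0.0.0/0
      GatewayId: !Ref InternetGateway

  PublicSubnet01RouteTableAssociation:
    Type: AWS::EC2::SubnetRouteTableAssociation
    Properties:
      SubnetId: !Ref PublicSubnet01
      RouteTableId: !Ref PublicRouteTable

  # The NAT gateway lives in a public subnet and needs an Elastic IP.
  NatGatewayEIP:
    Type: AWS::EC2::EIP
    DependsOn: VPCGatewayAttachment
    Properties:
      Domain: vpc

  NatGateway:
    Type: AWS::EC2::NatGateway
    Properties:
      AllocationId: !GetAtt NatGatewayEIP.AllocationId
      SubnetId: !Ref PublicSubnet01

  # Private route table: default route points to the NAT gateway.
  PrivateRouteTable:
    Type: AWS::EC2::RouteTable
    Properties:
      VpcId: !Ref VPC

  PrivateRoute:
    Type: AWS::EC2::Route
    Properties:
      RouteTableId: !Ref PrivateRouteTable
      DestinationCidrBlock: 0.0.0.0/0
      NatGatewayId: !Ref NatGateway
```

The only difference between the two route tables is the target of the default route: `GatewayId` for the public one and `NatGatewayId` for the private one. That difference is what makes a subnet public or private.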

EKS

Typically, an EKS cluster consists of:

- A control plane which manages the cluster

- Worker nodes which run your application pods, deployments, etc.

AWS manages the control plane. All we have to do is configure a few parameters (e.g. select the right VPC subnets).

The worker nodes can be handled in two different ways: you can either run EC2 instances or use AWS Fargate. When using EC2, we spawn virtual machines and configure and manage them on our own. Fargate, on the other hand, is a serverless compute engine fully managed by AWS which can be used to execute containers. In this article, we will use the EC2 approach since it gives us a little more control.

Below is the CloudFormation snippet:

    EKSControlPlane:
      Type: "AWS::EKS::Cluster"
      Properties:
        ResourcesVpcConfig:
          SecurityGroupIds:
            - !Ref ControlPlaneSecurityGroup
          SubnetIds:
            - !Ref PublicSubnet01
            - !Ref PublicSubnet02
            - !Ref PrivateSubnet01
        RoleArn: !GetAtt EksClusterRole.Arn
        Version: !Ref KubernetesVersion
        Name: !Sub '${AWS::StackName}-eks-cluster'

    EksWorkerNodes:
      DependsOn: EKSControlPlane
      Type: AWS::EKS::Nodegroup
      Properties:
        ClusterName: !Sub '${AWS::StackName}-eks-cluster'
        InstanceTypes:
          - !Ref EksInstanceType
        NodegroupName: !Sub '${AWS::StackName}-eks-node-group'
        NodeRole: !GetAtt EksNodeRole.Arn
        ScalingConfig:
          MinSize: 1
          MaxSize: 4
          DesiredSize: 1
        Subnets:
          - !Ref PrivateSubnet01
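The snippet references the parameters `KubernetesVersion` and `EksInstanceType` as well as a `ControlPlaneSecurityGroup` resource, none of which are shown in this article. A minimal sketch of how they might be declared is given below. The default instance type and the descriptions are assumptions made for illustration, and the full template may define them differently.

```yaml
# Sketch only: the default value and the descriptions are assumptions.
Parameters:
  KubernetesVersion:
    Type: String
    # No default on purpose: pass a version that EKS currently supports.
    Description: Kubernetes version of the EKS control plane.

  EksInstanceType:
    Type: String
    Default: t3.medium                   # assumed default
    Description: EC2 instance type of the worker nodes.

Resources:
  # Security group handed to the control plane via ResourcesVpcConfig.
  ControlPlaneSecurityGroup:
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: Cluster communication with worker nodes
      VpcId: !Ref VPC
```

Because `KubernetesVersion` has no default in this sketch, a value has to be supplied whenever the stack is created.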

Access Management

We define the roles “EksClusterRole” and “EksNodeRole”, which are attached to the control plane and the worker nodes, respectively. Each role links a set of AWS-managed policies to the corresponding component. This way, we grant the permissions needed to access the subnets, the container registry, etc. Without these permissions, the cluster would not work properly.

The CloudFormation code looks like this:

    EksClusterRole:
      Type: AWS::IAM::Role
      Properties:
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal:
                Service:
                  - eks.amazonaws.com
              Action:
                - 'sts:AssumeRole'
        Description: Role to provide access to EKS
        ManagedPolicyArns:
          - arn:aws:iam::aws:policy/AmazonEKSClusterPolicy
        RoleName: !Sub '${AWS::StackName}-AmazonEKSClusterRole'

    EksNodeRole:
      Type: AWS::IAM::Role
      Properties:
        AssumeRolePolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Principal:
                Service:
                  - ec2.amazonaws.com
              Action:
                - 'sts:AssumeRole'
        Description: Role to provide access to EKS
        ManagedPolicyArns:
          - arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy
          - arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly
          - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
        RoleName: !Sub '${AWS::StackName}-AmazonEKSNodeRole'

Further Work

This blog article describes how to create a Kubernetes cluster with AWS CloudFormation. The cluster consists of a VPC, subnets, worker nodes and a control plane. However, the following topics are not covered yet:

- Spawn an Application Load Balancer.

- Deploy InfluxDB and Grafana in the cluster.

- Attach persistent storage to both services.

- Attach an SSL certificate to allow HTTPS traffic.

These topics will be presented in further blog articles.